The Real Science of Intelligence - A Love Letter to Cybernetics

Why the early cyberneticists were decades ahead of their time

When reading some of Stanislaw Lem’s stories as a young adult, I first stumbled across the word ‘cyberneticists’, which in the eyes of my younger self basically denoted a kind of tech wizard. Ever since reading Andrew Pickering’s The Cybernetic Brain: Sketches of Another Future, I have thought that this early impression was justified. In many ways cybernetics is the less successful but more brilliant twin of the poster child discipline of artificial intelligence (AI). Here I will explore why cybernetics was in many ways superior to AI, why it can still teach us a few important insights about the mind, and why it is relevant to the question of whether intelligent machines are dangerous to their creators.

Two Ways of Thinking about Intelligence

The beginning of cybernetics coincides with the publication of Norbert Wiener’s book Cybernetics: Or Control and Communication in the Animal and the Machine in 1948. The book was a heroic attempt to combine insights from various fields - statistical physics, neuroscience, communication theory, electronics, psychiatry and philosophy - into a single coherent conceptual framework for thinking about the mind. Perhaps its central overarching idea was that of control by feedback. Intelligent systems, the argument goes, are good at controlling their environment in a very general sense. Steering the world from states considered ‘bad’ to states considered ‘good’ by observing and intervening might be considered the hallmark trait of intelligence per se. And control, says Wiener, is always realized through negative feedback: tracking how well a state conforms to a system’s preferences and acting on the divergences. The simplest example of this is a thermostat that keeps the temperature in a room constant. Wiener and his followers believed that a science of intelligence needed to be built on this simple foundation.
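To make the thermostat example concrete, here is a minimal sketch of an on/off thermostat as a negative-feedback loop. The room model, the function name `simulate_thermostat` and all parameter values are made-up assumptions for illustration; the point is only that the controller acts on nothing but the error between the measured temperature and the setpoint.

```python
def simulate_thermostat(setpoint=20.0, outside=5.0, start=10.0, steps=200,
                        leak=0.1, heater_power=3.0, band=0.5):
    """Toy on/off thermostat (hypothetical room model and parameters)."""
    temp, heater_on, history = start, False, []
    for _ in range(steps):
        error = setpoint - temp      # how far the room is from the preferred state
        if error > band:             # too cold: switch the heater on
            heater_on = True
        elif error < -band:          # too warm: switch it off
            heater_on = False
        # Room physics: heat leaks towards the outside temperature, the heater adds heat.
        temp += leak * (outside - temp) + (heater_power if heater_on else 0.0)
        history.append(temp)
    return history

history = simulate_thermostat()
print(f"after settling: {min(history[20:]):.2f} to {max(history[20:]):.2f} degrees")
```

With these numbers the temperature settles into a narrow oscillation around 20 degrees. With `heater_power=0.0` the loop has nothing to act with, and the room simply drifts towards the outside temperature.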

The founding of the discipline of artificial intelligence is conventionally dated to the Dartmouth Summer Research Project on Artificial Intelligence, organized by John McCarthy, Marvin Minsky, Claude Shannon, and Nathaniel Rochester in 1956, eight years after the release of Wiener’s book. AI also wanted to be a science of intelligence, built centrally on the idea that the processes of thought, if rigorously defined, could be executed by a newly available tool: the digital computer. Early research focused on problem solving, symbolic reasoning and the mechanization of mathematical proofs.

To make a long story short, cybernetics saved the day and AI, after a long series of failures and misadventures, is basically dead today…

Wait, what? OK, the story is a little more complicated than that. The fact of the matter is that basically all the ideas that got AI started, except perhaps the most foundational one, that computers can be made to think, have fallen out of favor. It is just incredibly hard, if not impossible, to write computer code from scratch that solves some reasonably big class of problems! And so the paradigm of AI research propagated by its founders is now often referred to as ‘GOFAI’ - Good Old-Fashioned AI - to distinguish it from contemporary AI, the main difference being that the latter actually works.

On the other hand, the central ideas behind cybernetics have been vindicated. We can look at this from the perspective of the mind sciences, where predictive processing is all the rage now. Basically, the brain is conceived as an error minimization machine that constantly tries to predict the next sensory stimulus. All the brain ever sees of the world is the errors produced by the clash of its predictions with the real world. Sound familiar? Of course, errors are just another way of saying ‘negative feedback’. So if predictive processing really offers a ‘unified brain theory’, as many researchers today believe, then the cyberneticists were right all along. This article by Anil Seth nicely summarizes the convergence of predictive processing, the Bayesian brain and cybernetic thought. The book The Predictive Mind by Jakob Hohwy is the best introduction to the field I know.
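A drastically simplified sketch of the idea that a system can track the world through prediction errors: the update below is a plain delta rule (equivalently, an exponential moving average), not a model of predictive processing proper, and the hidden cause, noise level, learning rate and the name `track_signal` are arbitrary assumptions.

```python
import random

def track_signal(observations, learning_rate=0.1, initial_guess=0.0):
    """Update an internal estimate using nothing but prediction errors."""
    prediction = initial_guess
    errors = []
    for obs in observations:
        error = obs - prediction             # clash of prediction and input
        prediction += learning_rate * error  # nudge the estimate to shrink future errors
        errors.append(error)
    return prediction, errors

random.seed(0)
hidden_cause = 3.0  # the state of the world, never handed to the estimator directly
observations = [hidden_cause + random.gauss(0.0, 0.5) for _ in range(500)]
estimate, errors = track_signal(observations)
print(f"estimate: {estimate:.2f}, first error: {errors[0]:.2f}, last error: {errors[-1]:.2f}")
```

The estimate ends up close to the hidden value of 3.0 and the errors shrink to roughly the size of the noise, even though the estimate is only ever updated through errors.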

But we can also see the vindication of cybernetics if we look at contemporary AI! For the GOFAI approach has almost entirely been washed away by the flood called the ‘deep learning revolution’, which started in earnest when Geoffrey Hinton’s team achieved a stunning breakthrough in applying artificial neural networks to automated image recognition in 2012. In 2024, Hinton shared the Nobel Prize in Physics with John Hopfield for foundational work on machine learning with artificial neural networks. Since 2012, neural networks have been the central topic of contemporary AI. And training a neural network is just a procedure for making tiny adjustments to a network of artificial neurons based on prediction errors - neural networks are feedback machines! Backpropagation, the training algorithm Hinton helped popularize in the 1980s, is best described as clever math for ‘attributing’ the negative feedback to the neurons that caused it in a hierarchically structured network. But the basics of neural networks were invented by the cyberneticists Warren McCulloch and Walter Pitts in their 1943 article A Logical Calculus of the Ideas Immanent in Nervous Activity (great paper title btw). McCulloch was a close collaborator of Wiener’s.

Even worse for AI, it was one of the godfathers of the field, Marvin Minsky, who, together with Seymour Papert in their 1969 book Perceptrons, all but ended research into neural networks by showing that a single-layer neural network cannot compute certain logical functions, such as exclusive or (XOR). The basic result was valid, but it emphatically does not apply to deep neural networks with many layers; even so, its impact on progress was devastating. In a way, AI after the deep learning revolution should have renamed itself cybernetics, as it is built more on cybernetic foundations than on the ideas originally associated with the AI research program.
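The textbook illustration of this kind of limitation is XOR. In the sketch below, a single threshold unit trained with Rosenblatt’s error-driven perceptron rule stands in for the (more general) perceptrons analyzed in the book, and the function names are my own. The rule learns AND, but it cannot get XOR fully right, because no single line separates XOR’s positive and negative cases; a hand-wired network with one hidden layer computes XOR without trouble.

```python
INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = {x: x[0] & x[1] for x in INPUTS}
XOR = {x: x[0] ^ x[1] for x in INPUTS}

def fires(weights, bias, x):
    """Threshold unit: outputs 1 if the weighted input sum plus bias is positive."""
    return int(weights[0] * x[0] + weights[1] * x[1] + bias > 0)

def train_perceptron(target, epochs=100, lr=1.0):
    """Perceptron rule: nudge weights by the output error on each example."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x in INPUTS:
            error = target[x] - fires(w, b, x)  # feedback signal: -1, 0 or +1
            w[0] += lr * error * x[0]
            w[1] += lr * error * x[1]
            b += lr * error
    return w, b

def accuracy(w, b, target):
    return sum(fires(w, b, x) == target[x] for x in INPUTS) / len(INPUTS)

def two_layer_xor(x):
    """Hand-wired hidden layer: XOR(x) = AND(OR(x), NAND(x))."""
    h_or = fires((1, 1), -0.5, x)
    h_nand = fires((-1, -1), 1.5, x)
    return fires((1, 1), -1.5, (h_or, h_nand))

print("AND accuracy:", accuracy(*train_perceptron(AND), AND))
print("XOR accuracy:", accuracy(*train_perceptron(XOR), XOR))
print("two-layer XOR:", [two_layer_xor(x) for x in INPUTS])
```

The hidden layer here is wired by hand; what the deep learning revolution added is a way to find such weights automatically.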

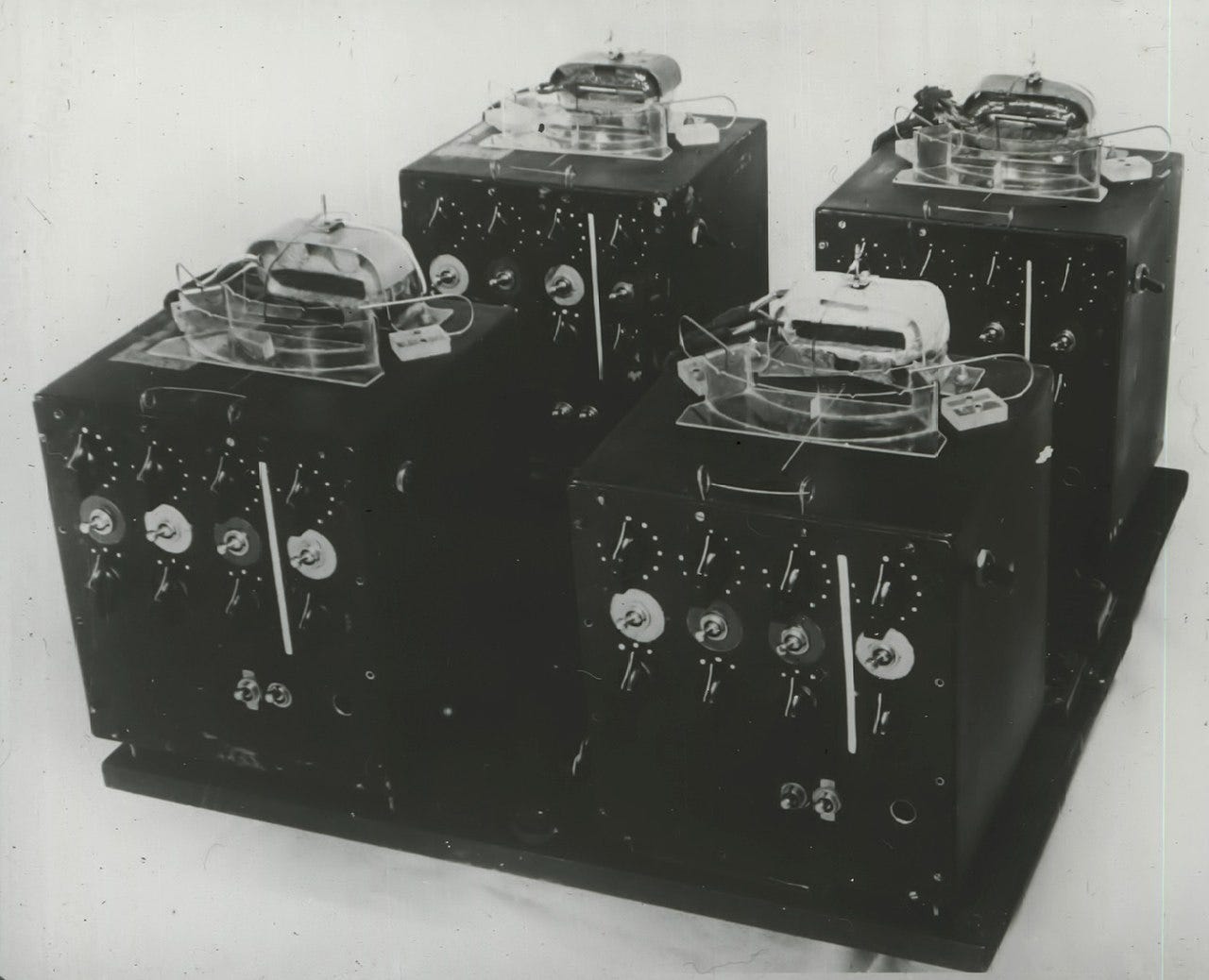

So why did the AI paradigm (or at least the name), despite all its flaws, outlive cybernetics? This is certainly a complex question, and I can’t give a complete answer. But I can identify one central, perhaps decisive factor: the rise of digital computers. Early digital computers, despite calculation speeds that look ridiculously slow next to today’s machines, were tailor-made for implementing the ideas of early AI. On the other hand, simulating complex self-organizing feedback processes on digital computers was infeasible at the time. Instead, the cyberneticists built analog devices like Ross Ashby’s homeostat, which directly implemented the principles of their craft (see figure). The results were interesting, but they were hard to scale. And so cybernetics slowly dissolved into other disciplines, its concepts being picked up by sciences like systems theory and control theory. What the heirs of cybernetics are doing, under the name of second-order cybernetics, often looks more like philosophy than science. AI, on the other hand, could always underpin its speculation with more or less successful gadgets and, despite two big crises known as ‘AI winters’, emerged as the dominant discipline. And that is why I am now just a boring ‘AI researcher’ instead of a cyberneticist machine wizard.

If the history of cybernetics has a point, then it is that being right is not enough…

It is not unreasonable to suspect that, had cybernetics dominated, we might have gotten the deep learning revolution two decades earlier. Hinton’s breakthrough was revolutionary in practice, but the math behind it is certainly no magic: the basic point was seeing that, when applying the chain rule to the network’s error, you can reuse the partial derivatives you have already calculated for the layers closer to the output. Maybe we would have gotten something even more interesting than ChatGPT.
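Here is a minimal sketch of that chain-rule bookkeeping for a tiny network with two inputs, three sigmoid hidden units, one sigmoid output and squared error. The architecture, the function names and the random initialization are assumptions chosen for illustration. The output layer’s error term `delta_out` is computed once and then reused for the hidden-layer gradients, and a finite-difference check confirms the gradients are right.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """params = (W1, b1, W2, b2): hidden weights/biases, output weights/bias."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
    return h, y

def loss(params, x, target):
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2

def backprop(params, x, target):
    """Gradients of the squared error with respect to all parameters."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    # Error term at the output, dE/dz_out, computed once...
    delta_out = (y - target) * y * (1.0 - y)
    grad_W2 = [delta_out * hj for hj in h]
    grad_b2 = delta_out
    # ...and reused for the hidden layer instead of re-deriving the whole chain.
    delta_hidden = [delta_out * W2[j] * h[j] * (1.0 - h[j]) for j in range(len(h))]
    grad_W1 = [[d * xi for xi in x] for d in delta_hidden]
    grad_b1 = list(delta_hidden)
    return grad_W1, grad_b1, grad_W2, grad_b2

def numeric_grad_W1(params, x, target, i, k, eps=1e-6):
    """Central finite difference for W1[i][k], as an independent check."""
    W1, b1, W2, b2 = params
    def shifted_loss(delta):
        W1_shifted = [row[:] for row in W1]
        W1_shifted[i][k] += delta
        return loss((W1_shifted, b1, W2, b2), x, target)
    return (shifted_loss(eps) - shifted_loss(-eps)) / (2 * eps)

random.seed(1)
params = (
    [[random.uniform(-1, 1) for _ in range(2)] for _ in range(3)],  # W1: 3 hidden x 2 inputs
    [random.uniform(-1, 1) for _ in range(3)],                      # b1
    [random.uniform(-1, 1) for _ in range(3)],                      # W2
    random.uniform(-1, 1),                                          # b2
)
x, target = (1.0, 0.5), 1.0
grad_W1, grad_b1, grad_W2, grad_b2 = backprop(params, x, target)
for i in range(3):
    for k in range(2):
        print(i, k, grad_W1[i][k], numeric_grad_W1(params, x, target, i, k))
```

The reuse is what makes the method cheap: each layer’s error term is one multiplication step away from the layer above it, instead of a fresh derivation through the whole network.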

Overcoming Binaries

So far I have surveyed why I think the triumph of AI over cybernetics is one of the great failures in the history of science. But I think there are still a few more lessons to learn.

An interesting feature of cybernetics is that the framework blasts through many conceptual binaries that we are often trapped in. The most important one may be that of organism-machine. The first chapter of Wiener’s book is called Newtonian and Bergsonian Time. Henri Bergson was a famous philosopher at the time, known for his defense of vitalism, the view that there is an irreconcilable difference between living beings and machines. Living beings, Bergson argued, can only be understood in terms of their relation to an experiential flow of ‘lived time’. Mechanical thought cannot grasp this lived time because it can only conceive of time as another spatial dimension. And this is why Newtonian mechanics is a time-symmetric theory - the concepts of past and future are alien to it. Wiener proposes to abolish this binary between symmetric Newtonian time and ungraspable asymmetric Bergsonian time using concepts from thermodynamics. As is widely accepted today, the asymmetry of time is best accounted for in terms of the increase of entropy, roughly a measure of the degree of disorder in the world. Introducing concepts of entropy, or order and disorder, makes real room in our world view for living beings without introducing some strange irreducible life force: living beings are ‘islands of order’ trying to resist the fall into disorder. In a way, the intuitions of the vitalists are validated, as you cannot think of organisms as machines in the interlocking-gears sense; but they are still patterns in the flow of matter.

I think the organism-machine binary has largely been overcome today. But some other questionable binaries still have their adherents.

Teleology-Mechanism

An important one is that of teleology and mechanism. There is still a bias among scientists and philosophers to think that explaining a process in terms of goals is somehow inherently inferior to explaining it in terms of a mechanism. Perhaps the most blatant example of this is John Searle, who thinks that all attributions of goals to biological processes are mere as-if ascriptions, except in the case of processes that are accompanied by consciousness (see his The Rediscovery of the Mind).

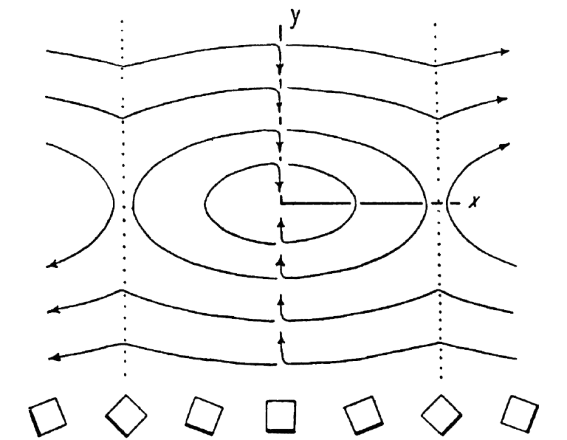

If cybernetic thought is right, that is, if organisms (natural or artificial) are just networks of interlocking feedback loops, then all organisms will, in the great majority of circumstances, tend to develop towards certain stable states. And this is precisely the kind of process that we can describe as goal-seeking! For the cyberneticists, ‘goal’, or if you want, ‘good’ and ‘bad’, are just labels we attach to the different poles of these stabilization processes.

One of the coolest results of the cybernetic trade may be Ashby’s construction of the homeostat (see image above). This analog computer is basically a unit that, given certain inputs, tends towards stable states and produces outputs depending on its internal state. The cool thing is that if you connect multiple homeostats so that their outputs stimulate each other, and perturb the whole arrangement from the outside, it will cycle through different states until it has found a ‘strategy’ that globally cancels the input perturbations! The whole system thus searches for a strategy of self-stabilization, because until such a strategy is found, the subsystems, in Ashby’s words, will ‘veto’ the global strategy by destroying its order.
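The core mechanism, which Ashby called ultrastability, can be caricatured in a few lines. In the hypothetical sketch below, each unit’s next value is a weighted sum of all units’ current values; whenever a unit leaves its permitted range, it ‘vetoes’ the current arrangement by drawing a fresh random set of input weights, a crude stand-in for the homeostat’s uniselector switches. The linear dynamics, the weight range, the bounds and the name `run_homeostat` are my assumptions, not Ashby’s circuit.

```python
import random

def run_homeostat(n_units=4, bound=1.0, quiet_steps=200, max_steps=10_000, seed=0):
    """Toy ultrastable system loosely inspired by Ashby's homeostat.

    Returns (settled, number_of_rewirings, final_state)."""
    rng = random.Random(seed)

    def rewire():
        # Stand-in for a uniselector: a fresh random set of input weights for one unit.
        return [rng.uniform(-0.6, 0.6) for _ in range(n_units)]

    weights = [rewire() for _ in range(n_units)]
    state = [rng.uniform(-5.0, 5.0) for _ in range(n_units)]  # external perturbation
    rewirings, quiet = 0, 0
    for _ in range(max_steps):
        # Each unit's next value is driven by the outputs of all units.
        state = [sum(w * s for w, s in zip(row, state)) for row in weights]
        vetoes = [i for i, s in enumerate(state) if abs(s) > bound]
        for i in vetoes:
            weights[i] = rewire()  # an out-of-bounds unit 'vetoes' its current wiring
        rewirings += len(vetoes)
        quiet = 0 if vetoes else quiet + 1
        if quiet >= quiet_steps:  # no veto for a long stretch: treated as settled
            return True, rewirings, state
    return False, rewirings, state

settled, rewirings, state = run_homeostat()
print(settled, rewirings, [round(s, 3) for s in state])
```

Nobody tells the system which configuration is right: it keeps rewiring at random until it stumbles on one that none of its parts vetoes. ‘Settled’ here only means no veto for `quiet_steps` consecutive steps, which the sketch takes as a stand-in for stability.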

I actually think the homeostat is still a worthwhile object of study today. Its main weakness is that it cannot learn from its environment at a rate greater than one bit per input signal - it either finds a globally adaptive state or it does not. But maybe, using today’s mathematical tools, we could build a homeostat network that can learn interesting things!

Be that as it may, the important point is that describing the homeostat as seeking a stable state is no less legitimate an account of its activity than a detailed description of its internal switches and magnets. In fact, it is a more economical way of saying the same thing, abstracting away material irrelevancies. With a cybernetic frame of mind, you can embrace teleological language without fear of falling back into vitalism.

Knowing That-Knowing How

Wars rage within the mind sciences. Their toll is heavy: piles of paper, years of thought-time wasted. These are the representation wars, as Axel Constant has called them. The basic issue fought over is whether mental activity, at its core, is representational or not. That is, are mental phenomena always, or at least mostly, best described as representing some state of affairs? Can content always be ascribed to mental processes? The positions range from theorists who hold representations to be the very subject matter of the mind sciences (like Jerry Fodor) to theorists who think that representation basically only happens in the use of language, while most cognition is best considered a form of pragmatic coping (like Daniel Hutto).

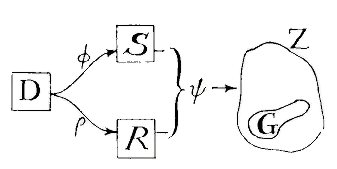

This brings us to perhaps the coolest theoretical result of the cybernetic tradition, Conant and Ashby’s good regulator theorem (GRT). In words, it tells us that:

Every good regulator of a system must be a model of that system. (Conant and Ashby, 1970)

The central message of the GRT is that if you have a controlled system and a controlling system, where the controlled system is subject to random fluctuations and control means keeping it in a preferred state, then there will be a structural correspondence between controlling and controlled system (formally, the behavior of the simplest optimal regulator is a mapping of the behavior of the system it regulates). It is not that hard to see why this must be the case: if a disturbance pushes the controlled system away from its proper state, then to counteract the disturbance you need to know the ‘direction’ in which the disturbed system will evolve.
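A toy version of the theorem’s setup can be checked by brute force. In the sketch below, the disturbance and the regulator’s action combine through a made-up outcome function, disturbances are equally likely, and, following Conant and Ashby, regulation is scored by the entropy of the outcomes (lower is better). The names `outcome` and `outcome_entropy` and the size `N` are illustrative assumptions. Searching all deterministic regulators, the best one found holds the outcome constant, and it has to respond differently to every disturbance: its action encodes which disturbance occurred, which is the sense in which it ‘models’ the system.

```python
from collections import Counter
from itertools import product
from math import log2

N = 4  # number of disturbance states and of regulator actions (toy choice)

def outcome(d, r):
    """Hypothetical system: disturbance d and regulator action r combine mod N."""
    return (d + r) % N

def outcome_entropy(policy):
    """Entropy (bits) of the outcome when each disturbance d is equally likely
    and the regulator answers d with action policy[d]."""
    counts = Counter(outcome(d, policy[d]) for d in range(N))
    probs = [c / N for c in counts.values()]
    return sum(p * log2(1 / p) for p in probs)

# Every deterministic regulator is a map from disturbances to actions.
policies = list(product(range(N), repeat=N))
best = min(policies, key=outcome_entropy)

print("best regulator:", best)                                # action chosen for d = 0..3
print("outcome entropy:", outcome_entropy(best))               # 0.0 bits: outcome held constant
print("ignoring the disturbance:", outcome_entropy((0,) * N))  # 2.0 bits
print("distinct responses:", len(set(best)))                   # one per disturbance
```

A regulator that ignores the disturbance passes all of its variety through to the outcome, which is why the constant policy scores the maximum of 2 bits here.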

While it would take a paper-length essay to elaborate the point, I think the GRT has the potential to end the representation wars: if we consider control as the underlying phenomenon, then it is clear that there will always be some kind of structural correspondence between controller and controlled, and we can deduce this a priori. Thus, all mental phenomena can be described in representational terms of some form. One can still fight about whether this is always helpful, but at least the deep ontological questions dissolve.

In a way, the representation wars seem like a remnant of the influence of early AI, which directly shaped guys like Fodor. If you think of cognition in terms of symbol processing and the representation of propositions, then the claim that something is a representational system is quite a strong one. If you think about it cybernetically, it becomes an almost trivial fact about the functioning of self-stabilizing systems.

Within-Without

Here is where I should tell you that the cyberneticists were well ahead of their peers in appreciating the importance of thinking of the mind as embedded in its environment, as contemporary ‘enactivists’ emphasize. The problem is that I sometimes feel that enactivism can be a dead end - in my opinion, from the perspective of the mind sciences, the environment is often best treated as a kind of black box that takes actions as inputs and produces stimuli as outputs. But my goal is to tell you that the cyberneticists were ahead of their time, and enactivism is all the rage in the mind sciences. So I will bracket my personal biases. Here we go:

There can’t be a proper theory of the brain until there is a proper theory of the environment as well… the subject has been hampered by our not paying sufficiently serious attention to the environmental half of the process… the “psychology” of the environment will have to be given almost as much thought as the psychology of the nerve network itself. - Ross Ashby at the 1952 Macy Conference, see Pickering p. 105

Perspectives like these emerge naturally when you think of thought as an extension of the self-stabilizing properties of organisms. Humans don’t look very self-stabilizing anymore when you place them in the heart of a star. Rather, being self-stabilizing is a property humans have relative to a certain set of environments, and understanding it is not merely a matter of understanding their internal cognitive processes, but of understanding how these processes are embedded in a certain ecological niche.

Programmed-Spontaneous

Finally, more than their peers in artificial intelligence, the cyberneticists were aware of the potentially dangerous implications of their craft. Later in his life, Wiener wrote a book on the societal implications of automation through cybernetics. And Ashby continuously warned his readers about the dangers inherent in building a system that is more intelligent than you:

[T]here is no difficulty, in principle, in developing synthetic organisms as complex, and as intelligent as we please. But we must notice two fundamental qualifications; first, their intelligence will be an adaptation to, and a specialization towards, their particular environment, with no implication of validity for any other environment such as ours; and secondly, their intelligence will be directed towards keeping their own essential variables within limits. They will be fundamentally selfish. So we now have to ask: In view of these qualifications, can we yet turn these processes to our advantage? (Ashby, Principles of the Self-Organizing System, 1962)

Importantly, this awareness of danger grew out of the superior theoretical underpinnings of their work. This is the reason why we find no comparable worries among the pioneers of AI. If you think of intelligence as a matter of programming instructions directly into the system, then the fear that the system might behave spontaneously in ways you did not intend will not seem very pressing. This might also be why worries about machine intelligence in the early 2000s often focused on ‘be careful what you wish for’ scenarios. AI danger was (and still is) often discussed in terms of the paperclip thought experiment, where you instruct a machine to make paperclip production more efficient and it starts turning everything, including you and your friends, into paperclips. The danger, if you believe in the classical AI paradigm, is setting the wrong goals.

By now it is generally accepted in the AI safety community that getting the system to actually adopt the goals you set is just as big a problem as choosing those goals (see for instance this paper). From the cybernetic perspective, this would have been clear from the start. As the Ashby quote shows, since intelligence is fundamentally a form of stabilization, every intelligence is to some degree selfish. A hyper-homeostat will find an equilibrium, and if you disturb it, it might find a way to bring you ‘into equilibrium’.

Many interesting tales from the world of cybernetics are left to be told. The story of how the cyberneticist Stafford Beer went to Salvador Allende’s Chile to implement cybernetic socialism. The story of how Gregory Bateson tried to revolutionize psychiatry based on cybernetics. Also, did you know that neural networks grew out of an explicitly Kantian and idealist semi-philosophical research project? And there is much more. But for now, thanks for reading The Anti-Completionist! For more thoughts on philosophy, cybernetics, science fiction and general nerdy stuff, please subscribe.

What to read?

Got interested and want to get into the subject? Here are a few recommendations:

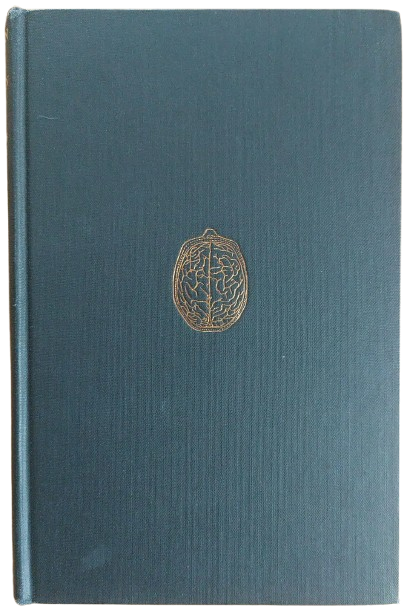

Wiener’s Cybernetics is a classic. However, it is math-heavy, and I would not start here if differential equations are not your thing.

Ashby’s Design for a Brain is just awesome, though not what we would today consider popular science. However, it has no mathematical (or other) prerequisites and starts at an extremely basic level.

Pickering’s The Cybernetic Brain is a great read, though he is more interested in the societal and philosophical implications than in scientific details.

Here you will find a collection of Ashby’s aphorisms, which are often quite insightful (he would have had great fun on X/Twitter…).

The GRT paper is actually quite readable, too.